Satisfying REACH Requirements in Predictive Toxicology

The OpenTox Workshop "Satisfying REACH Requirements in Predictive Toxicology" will take place 10-11 September 2009 at the Istituto Superiore di Sanità, Rome, Italy. Participants will include cross-industry users and regulatory experts as well as solution developers and providers. They will discuss the requirements and use cases of users in chemical toxicology evaluation and risk assessment, including the satisfaction of current and future requirements of the REACH legislation using alternative testing methods. The workshop format combines short presentations giving user, regulatory and solution perspectives with Knowledge Café discussions, held in small groups, on use cases, challenges, issues, solutions, collaboration opportunities and ways forward.

Contact:

If interested in participating in the workshop, please contact:

Barry Hardy (Barry.Hardy -(at)- douglasconnect.com).

Program:

Thursday 10 September

09.00 Welcome, Romualdo Benigni (ISS, Italy)

09.05 Introduction & Workshop Overview, Barry Hardy (OpenTox and Douglas Connect, Switzerland)

09.15 The Use of Computational Methods for the in silico Prediction of Chemical Properties in REACH, Andrew P Worth (Systems Toxicology Unit, Institute for Health and Consumer Protection, European Commission - Joint Research Centre, Italy)

09.40 Automated Workflows for Hazard Assessment, Elena Fioravanzo (S-IN Soluzioni Informatiche, Italy)

10.05 OpenTox Predictive Toxicology Use Cases, Barry Hardy (OpenTox and Douglas Connect, Switzerland)

10.30 Coffee Break

11.00 Knowledge Café Discussions

12.30 Group Discussion

13.00 Lunch

14.00 Opportunities for using Systems Biology Data for in silico Modeling of Personalized Medicine, Richard D. Beger (Division of Systems Toxicology, National Center for Toxicological Research, Food and Drug Administration, USA)

14.25 A Framework for Using Structural, Reactivity, Metabolic and Physicochemical Similarity to Evaluate the Suitability of Analogs for SAR-based Toxicological Assessments, Joanna Jaworska (Procter & Gamble, Modeling & Simulation, Biological Systems, Brussels Innovation Center, Belgium)

14.50 Integrated Assessment Tools for Environmental Risk Assessment, Willie Peijnenburg (National Institute for Public Health and the Environment, The Netherlands)

15.15 Prediction of Animal Toxicity Endpoints for ToxCast Phase I Compounds using a Combination of Chemical and Biological in vitro Descriptors, Alex Tropsha (Carolina Center for Computational Toxicology and Carolina Environmental Bioinformatics Research Center, University of North Carolina at Chapel Hill, USA)

15.40 Coffee Break

16.00 Knowledge Café Discussions

17.30 Group Discussion

18.00 Close

Friday 11 September

09.00 Predictive Toxicology for Cosmetics - An Industry Perspective, Stephanie Ringeissen (Safety Research Department, L’Oréal R&D, France)

09.25 OECD (Q)SAR Application Toolbox: Progress and Ongoing Developments, Bob Diderich (OECD, Paris, France)

09.50 Italian Specialty Chemical Industry Perspective on Predictive Toxicology, Maurizio Colombo (Health & Safety Evaluation, Industrial Regulation Management, Lamberti S.p.A., Italy)

10.15 Coffee Break

10.45 Knowledge Café Discussions

12.00 Group Discussion

12.30 Lunch

13.30 The Italian Chemical Industry and the REACH impact on SMEs, Antonio Conto (Chemsafe Srl, Italy)

13.55 Classification- and Regression-Based QSAR of Chemical Toxicity on the Basis of Structural and Physicochemical Similarity, Oleg Raevsky (Department of Computer-Aided Molecular Design, Institute of Physiologically Active Compounds of Russian Academy of Sciences, Moscow, Russia)

14.20 Analysis of ToxCast Data and in vitro / in vivo Endpoint Relationships, Romualdo Benigni (Istituto Superiore di Sanità, Italy)

14.45 Coffee Break

15.15 Knowledge Café Discussions

16.30 Group Discussion

17.00 Close

Abstracts

The Use of Computational Methods for the in silico Prediction of Chemical Properties in REACH

Andrew P Worth (Systems Toxicology Unit, Institute for Health and Consumer Protection, European Commission - Joint Research Centre, Italy)

To promote the availability of reliable computer-based estimation methods for the regulatory assessment of chemicals, including industrial chemicals under the scope of REACH, the European Commission’s Joint Research Centre (JRC) has been developing a range of user-friendly and publicly accessible software tools.

Toxtree predicts various kinds of toxic effect by applying decision tree approaches. The set of decision trees currently includes the Cramer classification scheme, the Verhaar scheme, the BfR rulebases for irritation and corrosion, the Benigni-Bossa scheme for mutagenicity and carcinogenicity, and the START rulebase for persistence and biodegradability. New rulebases can easily be developed and incorporated.

Toxmatch generates quantitative measures of chemical similarity. These can be used to compare datasets and to calculate pairwise similarity between compounds. Consequently, Toxmatch can be used to compare model training and test sets, to facilitate the formation of chemical categories, and to support the application of read-across between chemical analogues.

DART (Decision Analysis by Ranking Techniques) was developed to make ranking methods available to scientific researchers. DART is designed to support the ranking of chemicals according to their environmental and toxicological concern and is based on the most recent ranking theories.
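As an illustration of the kind of pairwise similarity measure described for Toxmatch, the following minimal sketch computes the Tanimoto coefficient between sets of structural features. It is not Toxmatch code: the feature sets, compound names and function names are hypothetical, and Toxmatch itself offers its own descriptors and similarity indices.

```python
from itertools import combinations


def tanimoto(a: set, b: set) -> float:
    """Tanimoto (Jaccard) coefficient: shared features / all features."""
    if not a and not b:
        return 1.0  # convention chosen here: two empty sets are identical
    return len(a & b) / len(a | b)


def pairwise_similarity(fingerprints: dict) -> dict:
    """Similarity for every unordered pair of compounds, keyed by name pair."""
    return {
        (x, y): tanimoto(fingerprints[x], fingerprints[y])
        for x, y in combinations(sorted(fingerprints), 2)
    }


# Hypothetical feature sets, for illustration only.
fingerprints = {
    "phenol": {"aromatic_ring", "hydroxyl"},
    "cresol": {"aromatic_ring", "hydroxyl", "methyl"},
    "toluene": {"aromatic_ring", "methyl"},
}

similarities = pairwise_similarity(fingerprints)
for pair, value in similarities.items():
    print(pair, round(value, 2))
```

A matrix of such values is one simple way to compare a training set with a test set or to shortlist candidate analogues for read-across.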

Finally, the JRC is developing a web-based inventory of (Q)SAR models (the JRC QSAR Model Database) which will help to identify relevant (Q)SARs for chemicals undergoing regulatory review. The JRC QSAR Model Database provides publicly-accessible information on QSAR models and will enable any developer or proponent of a (Q)SAR model to submit this information by means of a QSAR Model Reporting Format (QMRF). The in silico tools developed by the JRC are freely available at (http://ecb.jrc.ec.europa.eu/qsar/).

In the REACH guidance on the assessment of chemicals, it is recommended that these and other tools should be used in a stepwise (tiered) approach. This presentation makes reference to key aspects of the REACH guidance and explains how the hazard of chemicals can be assessed by using multiple computational methods within the overall framework of a tiered non-testing approach.

References

Bassan A, Worth AP (2008) The Integrated Use of Models for the Properties and Effects of Chemicals by means of a Structured Workflow. QSAR & Combinatorial Science 27, 6-20

ECHA (2008) Guidance on Information Requirements and Chemical Safety Assessment. European Chemicals Agency, Helsinki, Finland. Available at: http://reach.jrc.it/docs/guidance_document/information_requirements_en.htm/

Gallegos-Saliner A, Poater A, Jeliazkova N et al (2008) Toxmatch – A Chemical Classification and Activity Prediction Tool based on Similarity Measures. Regulatory Toxicology and Pharmacology 52, 77-84.

OECD (2007) Guidance Document on the Validation of (Quantitative) Structure Activity Relationship [(Q)SAR] Models. OECD Series on Testing and Assessment No. 69. ENV/JM/MONO(2007)2. Organisation for Economic Cooperation and Development, Paris, France. Available at: http://www.oecd.org/

Patlewicz G, Jeliazkova N, Safford RJ et al (2008) An evaluation of the implementation of the Cramer classification scheme in the Toxtree software. SAR QSAR Environ Res 19, 397-412.

Pavan M, Worth A (2008) Publicly-accessible QSAR software tools developed by the Joint Research Centre. SAR QSAR Environ Res 19, 785-799.

Worth A, Patlewicz G eds. (2007) A Compendium of Case Studies that helped to shape the REACH Guidance on Chemical Categories and Read Across. European Commission report EUR 22481 EN. Office for Official Publications of the European Communities, Luxembourg. Available at: http://ecb.jrc.it/qsar/publications/

Worth A, Bassan A, Fabjan E et al (2007) The Use of Computational Methods in the Grouping and Assessment of Chemicals - Preliminary Investigations. European Commission report EUR 22941 EN. Office for Official Publications of the European Communities, Luxembourg. Available at: http://ecb.jrc.it/qsar/publications/

Automated Workflows for Hazard Assessment

Elena Fioravanzo, Arianna Bassan and Matteo Stocchero (S-IN Soluzioni Informatiche Via G. Ferrari 14, Vicenza, Italy)

In the regulatory framework of REACH there is an urgent need for valid, reliable and accurate in silico approaches; importantly there is also an urgent need for integrated tools that allow users to generate adequate predictions. This perspective focuses on the possible use of non-testing methods in the regulatory assessment of chemicals; the use of different tools for generating adequate predictions will be discussed.

A single in silico prediction model may provide acceptable results. However, because all models are by definition simulations of reality and can never be completely accurate, a single model will sometimes fail. Predictions from different models overlap to a large degree, but some compounds are classified accurately by one method and not by another. This difference enables us to combine predictions by applying a consensus approach.

We implement a consensus-like approach by means of a pipelining technology, KNIME, that allows us to integrate a number of models that may be useful for the problem under investigation. KNIME is a popular modular data exploration platform that enables the user to visually create data flows (often referred to as pipelines); it selectively executes some or all analysis steps, and later it allows the user to investigate the results through interactive views of data and models. We will discuss workflows that allow the user to easily predict a new compound according to different classification schemes, going through structure preparation, prediction and prediction validation, and final classification.
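The consensus idea can be sketched outside any workflow tool as a simple majority vote over the labels returned by several models. This is only an assumed, minimal scheme for illustration: the model names, the labels and the "inconclusive" tie rule are hypothetical and are not taken from the KNIME workflows discussed in the talk.

```python
from collections import Counter


def consensus(predictions: dict[str, str]) -> tuple[str, float]:
    """Majority vote over per-model labels.

    Returns the winning label and the fraction of models that agree with it.
    A tie for first place is reported as "inconclusive".
    """
    if not predictions:
        raise ValueError("at least one model prediction is required")
    ranked = Counter(predictions.values()).most_common()
    top_label, top_count = ranked[0]
    agreement = top_count / len(predictions)
    if len(ranked) > 1 and ranked[1][1] == top_count:
        return "inconclusive", agreement
    return top_label, agreement


# Hypothetical outputs of three classification models for one compound.
label, agreement = consensus(
    {"model_a": "toxic", "model_b": "toxic", "model_c": "non-toxic"}
)
print(label, round(agreement, 2))
```

Reporting the level of agreement alongside the label lets a user see whether the models were unanimous or split.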

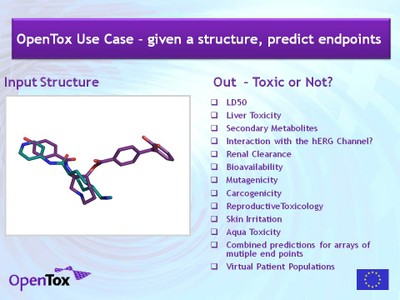

OpenTox Predictive Toxicology Use Cases

Barry Hardy (OpenTox and Douglas Connect, Switzerland)

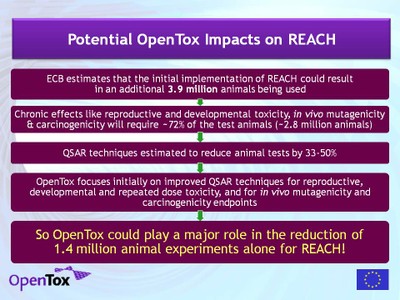

The EC-funded FP7 project “OpenTox” is developing an open source-based, integrated predictive toxicology framework that provides unified access to toxicological data and (Q)SAR models. OpenTox provides tools for the integration of data, for the generation and validation of (Q)SAR models for toxic effects, libraries for the development and integration of (Q)SAR algorithms, and scientifically sound validation routines. OpenTox supports the development of applications for non-computational specialists in addition to interfaces for risk assessors, toxicological experts and model and algorithm developers.

OpenTox is relevant for the implementation of REACH as it allows risk assessors to access experimental data, (Q)SAR models and toxicological information from a unified interface that adheres to European and international regulatory requirements including OECD Guidelines for validation and reporting. The OpenTox framework is being populated initially with data and models for chronic, genotoxic and carcinogenic effects. These are the endpoints where computational methods promise the greatest potential reduction in animal testing required under REACH. Initial research has defined the essential components of the framework architecture, approach to data access, schema and management, use of controlled vocabularies and ontologies, web service and communications protocols, and selection and integration of algorithms for predictive modeling.

This perspective will present the following use cases that have been documented for OpenTox service and application development:

- (Q)SAR-based predictive toxicology prediction and reporting for a compound.

- Validation of a (Q)SAR-based predictive toxicology model.

- Integration of multiple data sources and resources for a predictive toxicology model.

- Service-based collaborative workflow for combined in silico and in vitro predictive toxicology applications using distributed resources.

The use cases are presented with the intent to seed their discussion and criticism during the succeeding Knowledge Café discussions. Discussion of the use cases can include analysis for REACH-relevant risk assessment, chemical categorization and prioritisation, and chemical and food safety evaluation. The outcome of the discussions should help provide guidance for service development for satisfying the user requirements identified.

Opportunities for using Systems Biology Data for in silico Modeling of Personalized Medicine

Richard D. Beger (Branch Chief, Center for Metabolomics, Division of Systems Toxicology, National Center for Toxicological Research, Food and Drug Administration, Jefferson, AR 72079-9502, USA)

Systems Biology, with the advent of the ‘omics’ technologies, has the ability to provide better evaluation tools and models for diseases and better identification and quantification of safety and mode of action biomarkers, and will increase the capabilities of personalized medicine. Using the information provided by these technologies presents both a challenge and opportunities for in silico modeling. MAQC IV is going to evaluate in silico methods for predicting personalized adverse drug reactions and efficacy via drug-protein interactions. But modeling genetics-drug interactions alone may not provide all the information needed for evaluation of gene-environment issues that may be required to fully define personalized medicine. Metabolites are connected not only to a patient’s genotype and disease or health status but also to environmental factors like nutrition, gut microflora, drugs, age and exercise. Metabolomics data from biofluid and tissue sample analysis contain information about the patient’s genotype and phenotype. Metabolomics data can be rapidly collected on biofluid samples over time, providing temporal metabolic analyses. Examples of how metabolomics can provide efficacy, safety, and pharmacokinetic information on a patient’s phenotype will be provided. Recently polypharmacy has been associated with adverse events, and modeling polypharmacy represents a complex but potentially important issue that can be used in personalized medicine decisions.

A Framework for Using Structural, Reactivity, Metabolic and Physicochemical Similarity to Evaluate the Suitability of Analogs for SAR-based Toxicological Assessments

Joanna Jaworska (Procter & Gamble, Modeling & Simulation, Biological Systems, Brussels Innovation Center, Temselaan 100, 1853 Strombeek-Bever, Belgium)

A systematic expert-driven process applied in Procter & Gamble is presented for evaluating analogs in read across for use in SAR (structure activity relationship) toxicological assessments. The approach involves categorizing potential analogs based upon their degree of structural, reactivity, metabolic and physicochemical similarity to the chemical with missing toxicological data (target chemical). It extends beyond structural similarity, and includes differentiation based upon chemical reactivity and addresses the potential that an analog and target could show toxicologically significant metabolic convergence or divergence. In addition, it identifies differences in physicochemical properties, which could affect bioavailability and consequently biological responses observed in vitro or in vivo. The result is a comprehensive framework to apply chemical and biochemical expert judgment in a systematic manner to identify and evaluate factors that can introduce uncertainty into SAR assessments, while maximizing the appropriate use of all available data. I will identify needs regarding computational toxicology methods and chemoinformatic tools to improve this read-across process.

Integrated Assessment Tools for Environmental Risk Assessment

Willie Peijnenburg (National Institute for Public Health and the Environment, The Netherlands)

The use of non-testing methods is strongly advocated within REACH. Up till now, however, clear guidance on how to apply non-testing methods is lacking. Also, guidelines and recommendations on how to deal with non-testing methods are lacking for the majority of endpoints relevant for risk assessment. This is due mainly to the wide diversity of tools that are potentially available, the lack of sufficient structured data to allow for thorough validation and method development, and the lack of methods that allow for extrapolation of laboratory-obtained in vitro test results to in vivo organisms under realistic exposure conditions.

Progress will be discussed with regard to the development of a decision support tool that is aimed at integrating the environmental risk assessment of four classes of environmentally relevant substances. As a first step, relevant data and relevant QSAR models were screened based upon literature and database surveys. The results obtained are illustrative of the amount of data and models generally available for risk assessment purposes. Data needs will be demonstrated, both in terms of relevant endpoints and in terms of what is necessary for future application in read-across.

Prediction of Animal Toxicity Endpoints for ToxCast Phase I Compounds using a Combination of Chemical and Biological in vitro Descriptors

Hao Zhu, Liying Zhang, Ivan Rusyn, and Alexander Tropsha (Carolina Center for Computational Toxicology and Carolina Environmental Bioinformatics Research Center, University of North Carolina at Chapel Hill, Chapel Hill, NC 27599, U.S.A.)

A wealth of available biological data such as those from ToxCast Phase I requires new computational approaches to link chemical structure, short-term bioassay results, and chronic in vivo toxicity responses. We advance the predictive QSAR modeling workflow that relies on effective statistical model validation routines and implements both chemical and biological (i.e., in vitro assay results) descriptors of molecules to develop in vivo chemical toxicity models. We have developed two distinct methodologies for in vivo toxicity prediction utilizing both chemical and biological descriptors. In the first approach, we employ biological descriptors directly in combination with chemical descriptors to build models. This approach requires that the biological descriptors be known in order to make a toxicity assessment for new compounds. Our second modeling approach employs the explicit relationship between in vitro and in vivo data as part of a two-step hierarchical modeling strategy. First, binary QSAR models using chemical descriptors only are built to partition compounds into classes defined by patterns of in vitro – in vivo relationships. Second, class-specific conventional QSAR models are built, also using chemical descriptors only. Thus, this hierarchical strategy affords external predictions using chemical descriptors only. We will present the results of applying both strategies to ToxCast Phase I and similar data. Our studies suggest that utilizing in vitro assay results as biological descriptors affords prediction accuracy that is superior both to conventional QSAR modeling that utilizes chemical descriptors only and to in vivo effect classifiers based on in vitro biological response only. We will discuss how our models are being used to prioritize compound selection for ToxCast Phase II studies.
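The control flow of the two-step hierarchical strategy can be sketched as follows. This is a structural illustration only, not the authors' models: the class names, the descriptor cut-off and the linear coefficients are all hypothetical placeholders standing in for trained QSAR models.

```python
from typing import Callable, Dict, Sequence, Tuple

Descriptors = Sequence[float]  # chemical descriptors only


def hierarchical_predict(
    x: Descriptors,
    class_model: Callable[[Descriptors], str],
    class_specific_models: Dict[str, Callable[[Descriptors], float]],
) -> Tuple[str, float]:
    """Step 1: assign the compound to a class using chemical descriptors.
    Step 2: apply the QSAR model trained for that class."""
    assigned_class = class_model(x)
    toxicity = class_specific_models[assigned_class](x)
    return assigned_class, toxicity


# Hypothetical stand-ins for trained models.
def toy_class_model(x: Descriptors) -> str:
    # Placeholder rule: split on the first descriptor.
    return "class_1" if x[0] < 3.0 else "class_2"


toy_class_specific_models = {
    "class_1": lambda x: 0.5 * x[0] + 1.0,
    "class_2": lambda x: 0.2 * x[0] + 2.0,
}

result_low = hierarchical_predict([2.0], toy_class_model, toy_class_specific_models)
result_high = hierarchical_predict([4.0], toy_class_model, toy_class_specific_models)
print(result_low, result_high)
```

The point of the design is that neither step needs in vitro measurements at prediction time; the in vitro data are used only when the classes are defined during training.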

Predictive Toxicology for Cosmetics - An Industry Perspective

Stephanie Ringeissen (Safety Research Department, L’Oréal R&D, France)

Interest in computer-based predictive toxicology is growing rapidly in the cosmetics industry to comply with new legislation, sustain innovation and generate non-testing information for regulatory decision making. Lessons learnt in recent years will be presented with case studies showing the benefits of entering the new paradigm in toxicology and of working with a multi-disciplinary team of biologists, toxicologists, chemists & developers.

First, the surrounding environment in which computational tools are developed is a key element. By surrounding environment, we mean the possibility to interact closely with:

- developers of software: building mutual trust between end-users & developers is critical to guarantee a beneficial exchange of feedback & expertise. Software companies providing high-quality customer support are favoured; Case-study: refinement of alerts in Derek for Windows and Toxtree;

- in-house & external experts in biology/toxicology who can ensure a sound use of toxicity data used to populate databases and develop predictive models;

- end-users such as safety assessors who use predictions for regulatory purposes & therefore have specific requirements. Case-study: development of a model to predict the severity of skin irritancy (and not just EU risk phrases for labelling) to answer safety assessors' needs.

Secondly, the transparency of computational tools is another key parameter. Transparency in terms of:

- Sources of data found in databases or included in the training sets of the models;

- Experimental conditions in which data have been generated;

- Mechanistic rationale underlying a model.

We do not need “black-box” tools but tools which comply with regulatory constraints (OECD Principles, REACH legislation, ECHA guidelines, etc.) and provide information on potential modes of action of toxicants.

Thirdly, the match between industrial needs and what computational tools provide has to be improved in terms of:

- applicability domain (compound or chemical classes covered – drugs vs food ingredients vs cosmetics ingredients); Case-study: A study comparing performances of various models at forecasting the rodent carcinogenicity of a public set of 50 natural chemicals.

- Target endpoints (hazards but also ADME properties for risk assessment); Case-study: oxidation of fragrance terpenes upon air exposure.

Last, the integration of computational predictive methods with experimental assays in flexible platforms is needed in order to:

- Allow a full characterization of compounds in terms of toxicity and physico-chemical profiles;

- Share knowledge and facilitate the interpretation of results.

This will enable ITS (Integrated Testing Strategies) to be optimized & used effectively for safety & risk assessment.

OECD (Q)SAR Application Toolbox: Progress and Ongoing Developments

Bob Diderich, Terry Schultz, Michihiro Oi (OECD, Paris, France)

In March 2008, the OECD released a first version of the (Q)SAR Application Toolbox [www.oecd.org/env/existingchemicals/qsar] for free download. The main feature of the Toolbox is to allow the user to group chemicals into toxicologically meaningful categories and to fill data gaps by read-across and trend analysis. The Toolbox contains:

- databases with results from experimental studies,

- tools to estimate missing experimental values by read-across, i.e. extrapolating results from tested chemicals to untested chemicals within a category,

- tools to estimate missing experimental values by trend analysis, i.e. interpolating or extrapolating from a trend (increasing, decreasing, or constant) in results for tested chemicals to untested chemicals within a category, and

- a library of QSAR models.
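The two data-gap-filling techniques listed above can be illustrated with a minimal sketch. It is not Toolbox code: read-across is reduced here to averaging the values of tested category members, and trend analysis to a least-squares line through the tested members. The function names and example values are hypothetical.

```python
from statistics import mean
from typing import Sequence


def read_across(analog_values: Sequence[float]) -> float:
    """Estimate a missing value as the mean of tested category members."""
    return mean(analog_values)


def trend_estimate(xs: Sequence[float], ys: Sequence[float], x_new: float) -> float:
    """Fit y = slope * x + intercept to tested chemicals by least squares
    and evaluate the line at the untested chemical's descriptor value."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need at least two paired observations")
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("descriptor values must not all be identical")
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar
    return slope * x_new + intercept


# Hypothetical category: descriptor (e.g. chain length) vs. measured endpoint.
chain_length = [1.0, 2.0, 3.0]
endpoint = [2.0, 4.0, 6.0]

gap_by_read_across = read_across(endpoint)
gap_by_trend = trend_estimate(chain_length, endpoint, 4.0)
print(gap_by_read_across, gap_by_trend)
```

Read-across suits categories where the endpoint is roughly constant across members, while trend analysis suits categories where it changes regularly with a descriptor.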

The second phase of the development of the Toolbox started in October 2008 and will last until October 2012, with version 2.0 scheduled to be released in October 2010 and version 3.0 scheduled to be released in October 2012. The aim of the further development of the Toolbox is to extend its functionalities to ensure that the categories approach to filling data gaps works uniformly for all discrete organic chemicals and for all regulatory endpoints. The most challenging aspect of the further development is to develop categorisation mechanisms for complex regulatory endpoints like repeat dose toxicity, reproductive toxicity or developmental toxicity.

Industry Perspective on REACH and Predictive Toxicology Requirements

Maurizio Colombo (Health & Safety Evaluation, Industrial Regulation Management, Lamberti S.p.A., Italy)

For many years predictive toxicology was only a strange term for the chemical industry in Europe. For all regulatory purposes such as new chemical notification, risk assessment, and safety data sheet requirements, only in vivo and in vitro toxicology approaches were permitted; therefore the interest of chemical industries to develop knowledge and experiences on alternative in silico approaches was limited. There were a couple of exceptions in the pharmaceutical industries and in the case of registration in the USA of new chemicals. Under the Toxic Substances Control Act (TSCA) rules, the use of predictive (eco)toxicology was common to define some chemical-physical and ecotoxicological properties.

In any case Italian industry was interested in predictive toxicology with a few initiatives which were generally driven by the European Chemical Industry Council (CEFIC) and other European Associations.

The change in regulatory rules written in the first drafts of the REACH regulation was immediately understood by industries and different initiatives were started in order to provide general information to the industries and to develop new tools and systems for the better use of predictive toxicology methods.

Our experience in Italy is based on task force activities within Federchimica on the following items:

- to have complete information on the different activities in the European Union;

- to contact the Italian centers where predictive toxicology is used and developed;

- to organize activities in order to prepare industry specialists.

Our activities were carried out involving different researchers in Italy, starting with examples of use of predictive toxicological tools for chemical group substances, and proposing original works with specific molecules.

We have a couple of issues to address. The first is to strengthen the use of predictive toxicology in regulatory contexts. We receive differing messages from the Authorities about its extensive use. We also know that the situation differs from the US system, where a predictive toxicology tool has been adopted by the Authority, while in the EU the situation regarding tools and systems is not yet clear.

The second issue is to convince chemical industries in the Italian association or also in the Consortia formed under REACH to use predictive toxicology methods. For that we support the creation of “certified” organizations which work in this area of “good laboratory practices” development.

The Italian Chemical Industry and the REACH impact on SMEs

Antonio Conto (Chemsafe Srl, via Ribes 5, 10010 Colleretto Giacosa (TO), Italy)

Italian chemical production (57.4 billion €) ranks third in Europe, after Germany (135.9 billion €) and France (78.1 billion €) and ahead of the UK (55.5 billion €) and Benelux (54.6 billion €) [1]. Chemical production in Italy represents about 6% of total manufacturing production, with direct employment of 126,000 people and a total of 378,000 employees. Italian chemical production takes place in all sectors, which can be divided into three main groups:

- 17.5% consumer chemistry (i.e., perfumes, cosmetics, detergents, soaps)

- 45.7% basic chemistry and fibers (plastics and rubbers, petrochemistry, gases and fibers)

- 36.8% fine chemistry and specialties (agrochemicals, APIs, varnishes, inks)

Italian chemical companies are distributed in terms of size and turnover as follows [2]:

- 41% Small-medium enterprises (SME)

- 36% Multinational companies

- 23% medium-large Italian companies

If we consider that only 28 companies/groups are large Italian organizations, we conclude that most of the chemical companies in Italy are SMEs (< 250 employees). The proportion of SMEs in Italy is therefore higher than the European one, which is about 38%.

The REACH regulation imposes on all actors (manufacturers, importers, downstream users) a number of actions in order to register substances (on their own, in preparations, or in articles). In general terms, the financial burden of complying with the REACH regulation is high even if costs may be shared in Consortia or within the SIEF. This is particularly critical for Italian SMEs. Furthermore, SMEs are in many cases family owned, without a good regulatory background and with little opportunity to understand the complex REACH approach. In other cases SMEs are only traders which import EINECS chemicals from non-EU countries. They never faced regulatory and registration matters prior to the application of the REACH regulation and they do not have the cultural tools to be prepared to register.

The most important problem is, in any case, the financial one, as many of these companies possess a huge substance portfolio; costs are therefore multiplied by the number of substances and, in our opinion, cannot be afforded on the whole.

Italian SMEs hence need to be supported in two ways:

- increase their awareness and knowledge of the REACH system

- help them to decrease the cost of registration

The first point can be addressed by increasing the training of company personnel or by consulting closely with them on all the actions to be taken. The second point is more difficult and can only be achieved by reducing animal testing. From this perspective, we present the application of predictive in silico models, which may help such companies to avoid animal testing or at least to focus testing on appropriate studies, thereby reducing the final cost of registration.

References

1. Cefic, Federchimica, year 2008.

2. Federchimica 2007.

Classification- and Regression-Based QSAR of Chemical Toxicity on the Basis of Structural and Physicochemical Similarity

O.A. Raevsky, V.Ju. Grigor’ev, E.A. Modina, O.E. Raevskaja, Ja.V. Liplavskiy (Department of Computer-Aided Molecular Design, Institute of Physiologically Active Compounds of Russian Academy of Sciences, 142432, Chernogolovka, Moscow region, Russia)

Objectives. REACH requires the submission of costly test data for each chemical produced or imported, which are currently available for only a small percentage of chemicals. REACH is not intended to cause a large expansion of animal testing in order to meet the registration requirements. The alternative under REACH is to fill data gaps by estimating the missing data from the same data for similar chemicals using QSAR/QSPR techniques.

Purpose of the study: development of new predictive QSAR algorithms for chemical toxicity to different organisms.

Methodology platform: combination of structural and physicochemical similarity concepts with regression analysis.

Approaches:

- Classification by nearest structure neighbour(s) & calculation of additional toxicity contribution by physicochemical descriptors (CCT model) [1-3];

- Arithmetic Mean Toxicity (AMT model) [first presented results];

- Separate Clusterization (SC model) [first presented results];

- Super Overlapping Clusterization (SOC model) [first presented results].

Original software:

- HYBOT (HYdrogen BOnd Thermodynamics) [4],

- SLIPPER (Solubility, LIPophilicity, PERmeability) [6].

Objects:

- Tetrahymena pyriformis (1300 chemicals) [first presented results];

- Guppy, Fathead Minnow, Rainbow Trout (400 chemicals) [3,7,8];

- Daphnia magna (own data for 450 chemicals) [9];

- Mouse (3300 chemicals, intravenous injection), [first presented results];

- Rat (430 chemicals, intravenous injection and 420 chemicals inhalation), [first presented results].

Main conclusion: The use of the CCT model with “transport” descriptors calculated by the program HYBOT makes it possible to calculate the aquatic toxicity of chemicals very well. For toxicity to Tetrahymena pyriformis, a training set containing chemicals with a nonpolar narcosis mode of action (MOA) is described by a regression equation with the following statistics: n=714, r2=0.94, sd=0.23. The test set, containing chemicals with all other MOAs, has the following statistics: n=589, r2=0.88, sd=0.37. Such results evidently reflect the predominance of nonspecific interactions of chemicals with aquatic organisms.
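For readers unfamiliar with the reported statistics, the sketch below shows one common way to compute n, r2 and sd from observed and predicted values. The abstract does not define these quantities, so the formulas are assumptions: r2 is taken as the coefficient of determination and sd as the residual standard deviation with n minus the number of fitted parameters in the denominator. The data are invented for illustration.

```python
from math import sqrt
from statistics import mean
from typing import Sequence, Tuple


def fit_statistics(
    observed: Sequence[float],
    predicted: Sequence[float],
    n_params: int = 2,
) -> Tuple[int, float, float]:
    """Return (n, r2, sd) for a set of predictions.

    r2 = 1 - SS_res / SS_tot (coefficient of determination).
    sd = sqrt(SS_res / (n - n_params)); n_params=2 assumes a slope and an
    intercept, which is an assumption rather than the authors' definition.
    """
    n = len(observed)
    if n != len(predicted) or n <= n_params:
        raise ValueError("need paired values and more points than parameters")
    y_bar = mean(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - y_bar) ** 2 for o in observed)
    if ss_tot == 0:
        raise ValueError("observed values must not all be identical")
    return n, 1 - ss_res / ss_tot, sqrt(ss_res / (n - n_params))


# Invented toxicity values (e.g. log 1/C), for illustration only.
observed = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 1.5, 3.5, 3.5]

n, r2, sd = fit_statistics(observed, predicted)
print(n, round(r2, 2), round(sd, 2))
```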

QSAR models of mammalian toxicity have to be much more complex and must use 3D as well as 2D descriptors to take the different specific toxicity mechanisms into account. Nevertheless, even with only 2D descriptors calculated by the HYBOT and DRAGON programs, the AMT, SC and SOC models captured clear trends in mammalian toxicity (r2=0.60-0.70, sd ≈ ±0.50). These values should be regarded as reasonably good, because the data sets combined toxicity data obtained with the same route of administration but from animals of different sex, age and strain, so the mean error of the experimental log 1/LD50 determination is itself at least ±0.50. It is proposed that the models developed for mammalian toxicity could be used to support the registration of certain chemicals under REACH, either by replacing the need for animal testing or by increasing confidence in read-across assessments.

Next steps: Development of the above-mentioned QSAR models on the basis of different 2D and 3D physicochemical descriptors, and application of those models to chemical toxicity to mouse and rat (intraperitoneal and subcutaneous injection and oral administration).

Acknowledgements: This investigation was supported by the International Science & Technology Centre (ISTC projects № 888 and 3777).

References:

[1] O.A.Raevsky, Molecular Lipophilicity Calculations of Chemically Heterogeneous Chemicals and Drugs on the Basis of Structural Similarity and Physicochemical Descriptors, SAR & QSAR in Environmental Research, 2001, 12, 367-381.

[2] O.A.Raevsky, Physicochemical Descriptors in Property-Based Drug Design, Mini-Reviews in Medicinal Chemistry, 2004, 4, 1041-1052.

[3] O.A.Raevsky and J.C.Dearden, Creation of Predictive Models of Aquatic Toxicity of Environmental Pollutants with Different Mechanisms of Action on the Basis of Molecular Similarity and HYBOT Descriptors, SAR & QSAR in Environmental Research, 2004, 15, 433-448

[4] O.A.Raevsky. H-Bonding Parametrization in Quantitative Structure–Activity Relationships and Drug Design. In Molecular Drug Properties. Measurement and Prediction. R.Mannhold (Ed.). Copyright © 2008 Wiley-VCH Verlag GmbH & Co.KGaA, Weinheim ISBN: 978-3-527-31755-4, pp. 127-154, 2008.

[5] V.A.Gerasimenko, S.V.Trepalin, O.A.Raevsky, MOLDIVS - A New Program for Molecular Similarity and Diversity Calculations, in "Molecular Modelling and Prediction of Bioactivity", eds. K.Gundertofte and F.Jorgensen, 2000, Kluwer Academic/Plenum Publ., pp. 423-424.

[6] O.A.Raevsky, S.V.Trepalin, E.P.Trepalina, V.A.Gerasimenko, O.E.Raevskaja, SLIPPER-2001 – Software for Predicting Molecular Properties on the basis of Physicochemical Descriptors and Structural Similarity, J.Chem. Inf. Comput. Sci., 2002, 42, 540-549

[7] O.A.Raevsky, V.Ju.Grigor’ev, J.C.Dearden, E.E.Weber, Classification and Quantification of the Toxicity of Chemicals to Guppy, Fathead Minnow and Rainbow Trout. Part 2. Polar Narcosis Mode of Action, QSAR & Comb. Sci., Vol. 28, pp. 163-174, 2009.

[8] O.A.Raevsky, V.Ju.Grigor’ev, E.E.Weber, J.C.Dearden, Classification and Quantification of the Toxicity of Chemicals to Guppy, Fathead Minnow and Rainbow Trout. Part 1. Nonpolar Narcosis Mode of Action, QSAR & Comb. Sci., Vol. 27, pp. 1274-1281, 2008.

[9] Rasdolsky A.N., Gerasimenko V.A., Filimonov D.A., Poroikov V.V., Weber E.J., Raevsky O.A., Clusterization of organic compounds according to mode of toxic action on Daphnia Magna on the basis of three different methods, Abstracts of Fourth International Symposium on Computational Methods in Toxicology and Pharmacology Integrating Internet Resources, Moscow, 2007, p. 141.

Analysis of Toxcast Data and in vitro / in vivo Endpoint Relationships

Romualdo Benigni (ISS, Italy)

With the aims of shortening toxicity testing times, protecting animal health and welfare, and saving money, research on the so-called “Three Rs” (Replacement, Reduction and Refinement of animal testing) has been going on for years. Recently, new impetus has been given by the ToxCast project. ToxCast adopts pathway-based screening, which analyzes the perturbations that chemicals cause in biochemical and biological pathways thought to be critical to toxicity. Such perturbations are studied in isolated systems in vitro (both cell-free and cell-based), with the use of modern High-Throughput Screening (HTS) omics techniques.

We analyzed the results of ToxCast Phase 1, mainly from the perspective of replacing in vivo assays with in vitro ones. It appears that the in vitro / in vivo correlation is extremely low, or absent, depending on the in vivo toxicity endpoint considered. This evidence is in agreement with findings from other fields, ranging from drug design research that makes intensive use of omics technologies to more traditional research on alternative tests for regulatory purposes: isolated systems in vitro, when perturbed by chemicals, respond in a way radically different from how they respond when they are integrated into whole organisms.

From a practical point of view, this means that we are still quite far from being able to replace the classical animal toxicity assays with stand-alone in vitro alternatives (the first of the “Three Rs”), whereas progress seems closer in terms of the other Rs (Reduction, Refinement).